It's impossible to port Animoji to iPad Air

… not because of the TrueDepth camera, but because its GPU and CPU aren’t powerful enough to track faces.

Introduction: you might not need TrueDepth

This article is part 2 of a series on Animoji. Part 1 is here.

When the iPhone X was released last year, reviewers quickly found that Animoji, a feature exclusive to the iPhone X, didn’t actually need the iPhone X’s unique TrueDepth camera. Some people speculated that Apple purposefully limited Animoji to the iPhone X to drive sales for the new device, and that tinkerers would very soon unlock Animoji for all iPhones and iPads.

A year later, nobody’s ported Animoji to any other devices, which surprised me. What’s preventing developers from running Animoji on other devices? Is the TrueDepth required after all, or is something else missing from older devices?

Samsung’s avatars

Samsung’s Galaxy S9, which doesn’t have a depth camera, also came with animated avatars. To see if a depth camera helps tracking, I went to a store to test both Apple’s and Samsung’s respective animated avatars.

Apple’s face tracking is more inclined to keep the left side of the face symmetrical to the right side. If I close only one eye, iPhone X Animoji only half-closes that eye. If I raise one eyebrow, both eyebrows raise up on the avatar. Samsung’s avatars, in contrast, are more lenient towards asymmetrical expressions, allowing me to fully close one eye or raise only one eyebrow. However, this also causes Samsung’s avatars to often show my eyes at different sizes.

Importantly, the tracking fidelity is similar: both devices lose tracking when I turn my head to the side; the iPhone X’s TrueDepth doesn’t help there.

The Samsung Galaxy S9 shows that one doesn’t need a TrueDepth camera to show high quality animated avatars. So what other problems prevent developers from porting Animoji to older iPhones and iPads?

I decided to find out by porting Animoji to my 2013-vintage iPad Air.

Subdivisions

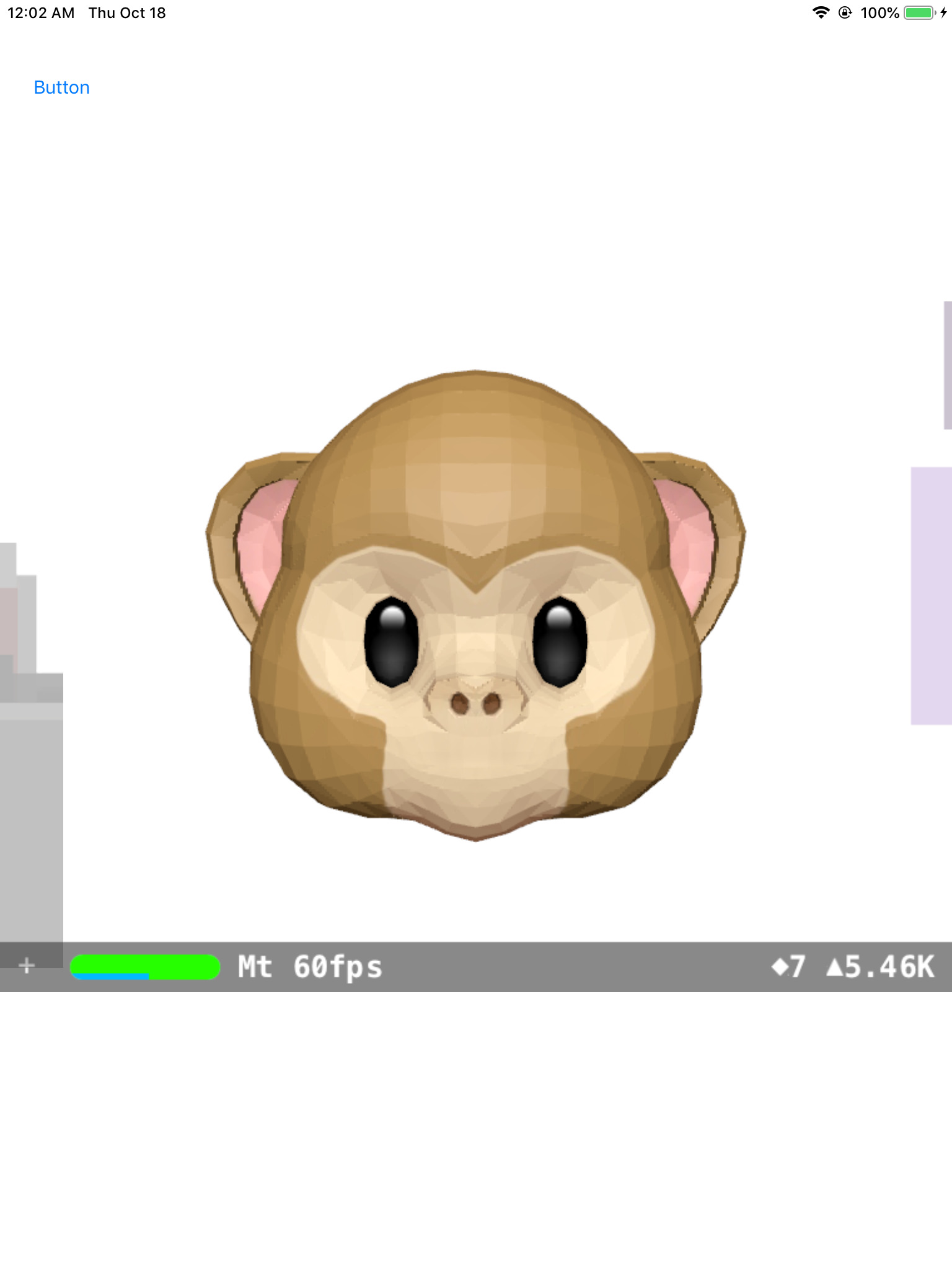

The first problem with Animoji on iPad Air is very easy to spot:

Instead of beautiful characters, I get a mess of glitched coloured squares. It looks like the GPU didn’t like something in the scene.

I tried running the SceneKit renderer in OpenGL ES mode. That didn’t work, because the renderer promptly crashed in a library called OpenSubdiv:

Assertion failed: (0 && "MTLPatchTable Creation Failed"), function Create, file /BuildRoot/Library/Caches/com.apple.xbs/Sources/SceneKit/SceneKit-470/lib/libOsd/sources/opensubdiv/osd/mtlPatchTable.mm, line 97.

Based on some research, OpenSubdiv is a library by Pixar that performs subdivision on 3D models to make them look less blocky.

It looks like this library uses Metal’s GPU acceleration, even when I asked for OpenGL ES, causing a crash in OpenGL ES mode. Maybe it’s similarly unreliable in Metal mode?

To test this, on every frame, I went through the entire SceneKit scene to disable subdivisions on every object:

scene.rootNode.enumerateHierarchy() { node, _ in
    guard let geometry = node.geometry else {
        return
    }
    geometry.subdivisionLevel = 0
}

With this change, most Animoji manage to render in a very low-polygon style:

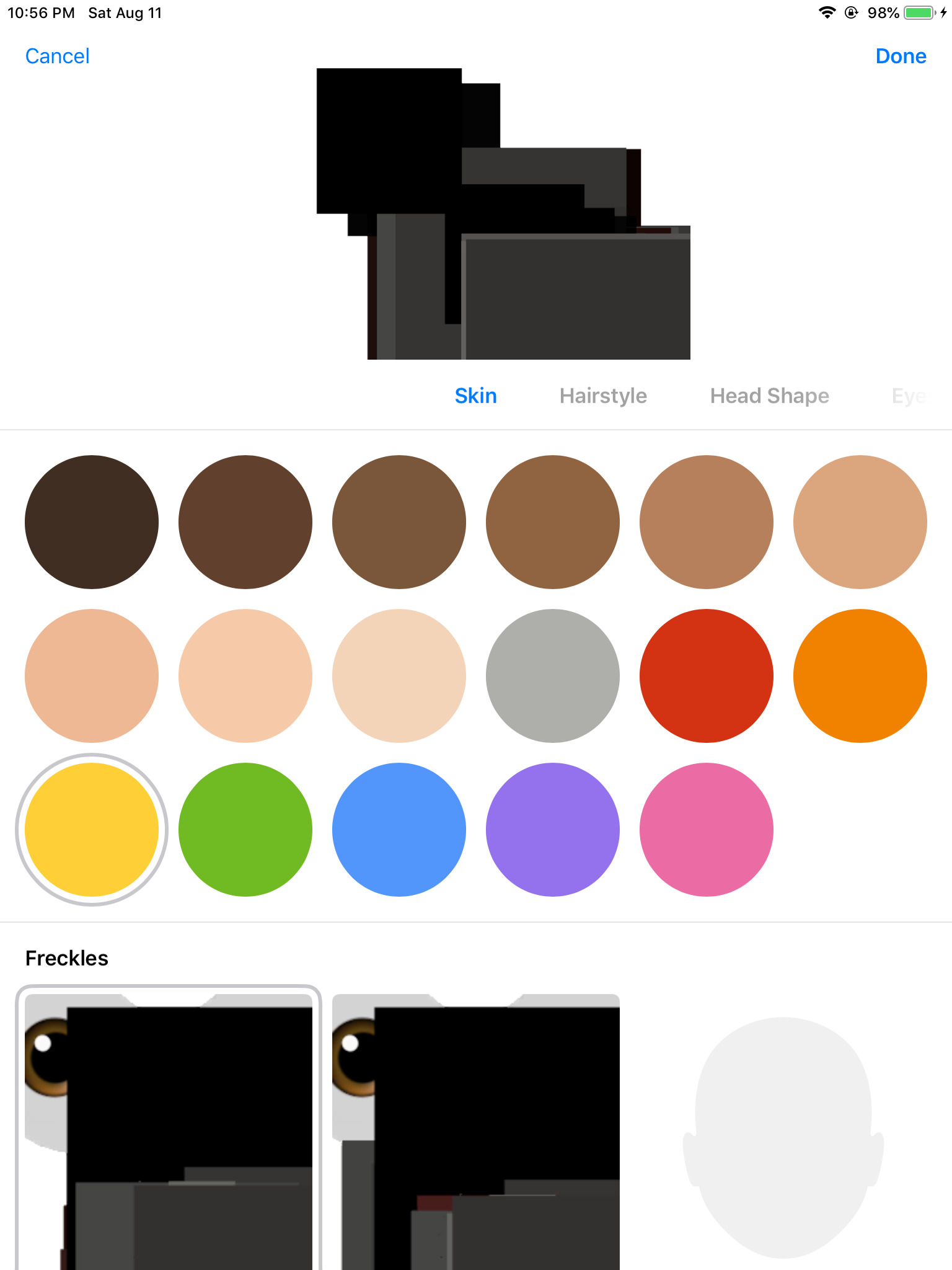

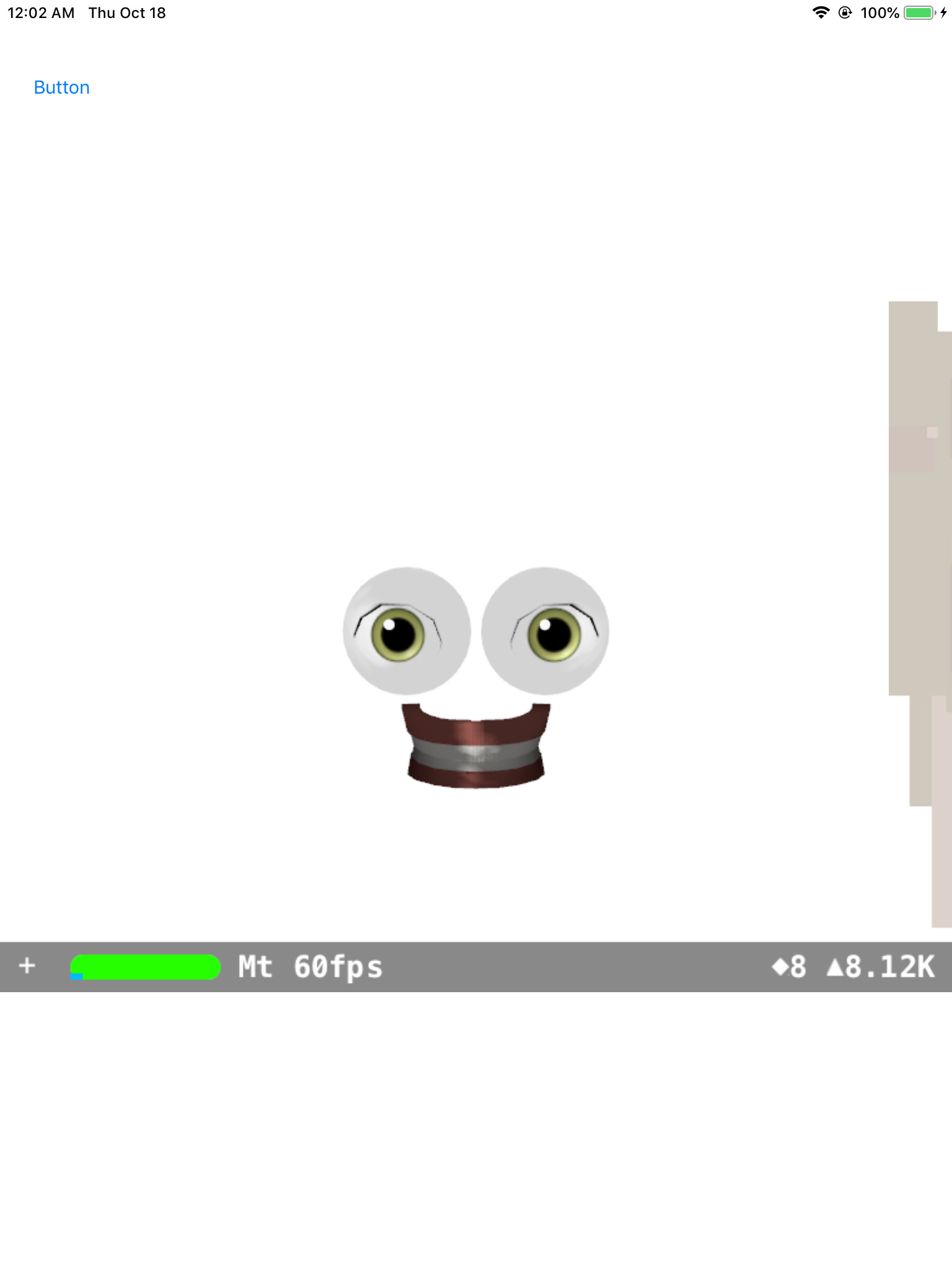

Except the new Memoji. They’re the stuff of nightmares:

Clearly, the iPad Air’s GPU can’t render Animoji properly.

But Animoji aren’t known for their looks: they’re interesting because they follow your real face’s every expression. Can the iPad Air track faces?

ARKit’s two face trackers: the hardware-accelerated tracker

I was surprised to find that ARKit supports not one, but two face trackers. The default tracker uses the public AVFoundation API to get tracking data from the TrueDepth camera.

Of course, this doesn’t work on an iPad Air. There is no TrueDepth camera, so Animoji prints this at startup:

Laurelmoji2[7880:1027380] [tracking] face tracking is not supported by this device

Oddly, there is a method in ARKit called ARCoreMediaRGBFaceTrackingEnabled. Does this allow running ARKit’s face detection with the front RGB sensor only?

I made the function return true, and also made the ARFaceTrackingDevice method return the regular camera instead of the TrueDepth camera. Then I started ARKit again:

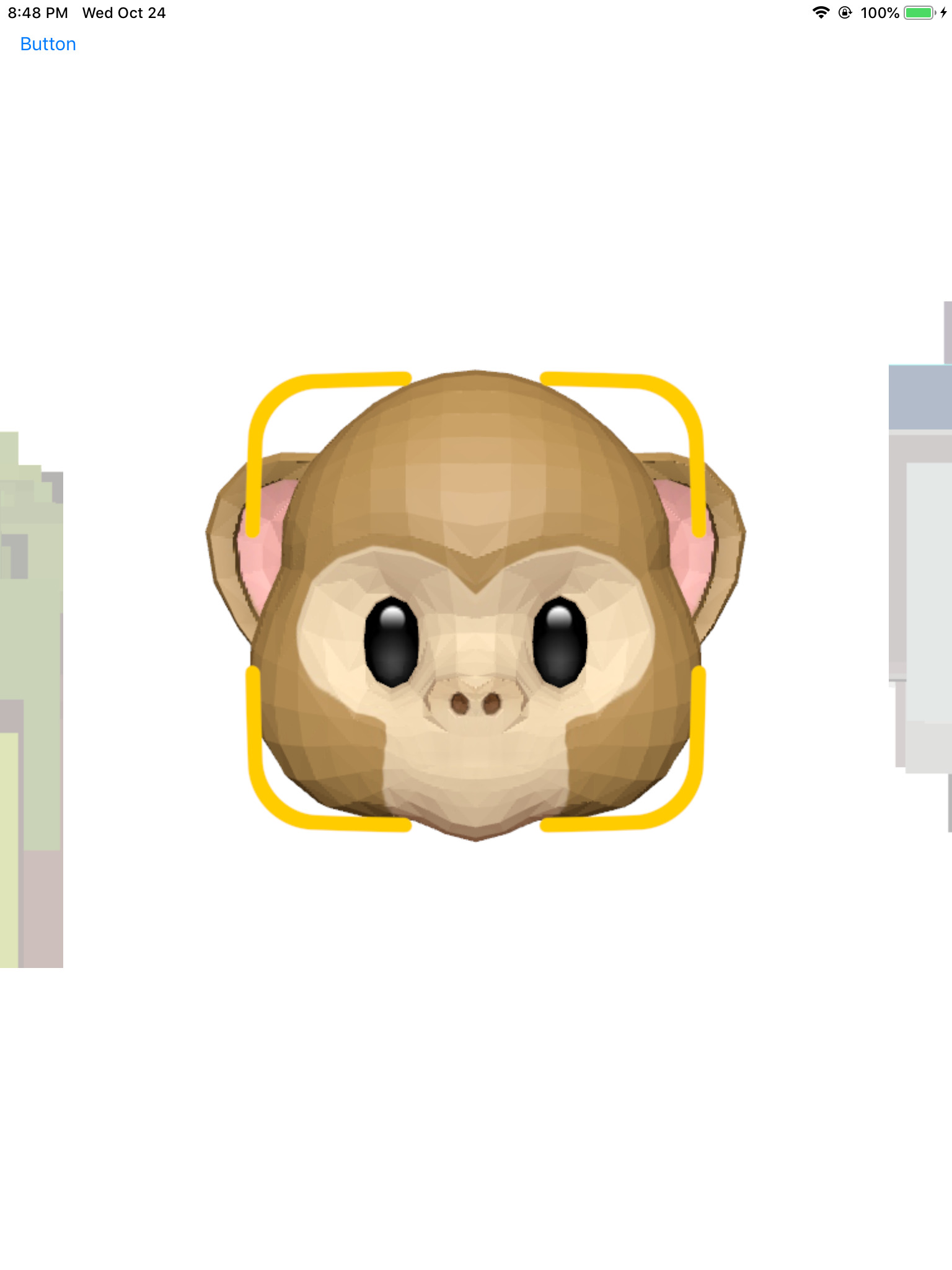

Hey, look! A yellow square showed up, just like on the real iPhone X when it can’t find a face. Unfortunately, the square never goes away, and no face is ever detected. Why?

It turns out ARKit’s default face tracker doesn’t do any face detection itself: it just asks AVFoundation (iOS’s camera API) to detect faces using the undocumented metadata type AVMetadataObjectTypeTrackedFaces. This data is then sent, along with the video and depth data, to ARKit.

Putting a breakpoint shows that -[ARFaceTrackingImageSensor capturedSynchedOutput:didOutputSampleBuffer:fromVideoConnection:metaDataOutput:didOutputMetadataObjects:didOutputDepthData:atTime:], the method that receives face detection events, is never called. There are two hints for why this occurs:

-

An examination of iOS didn’t turn up any code that detects faces for this metadata type.

-

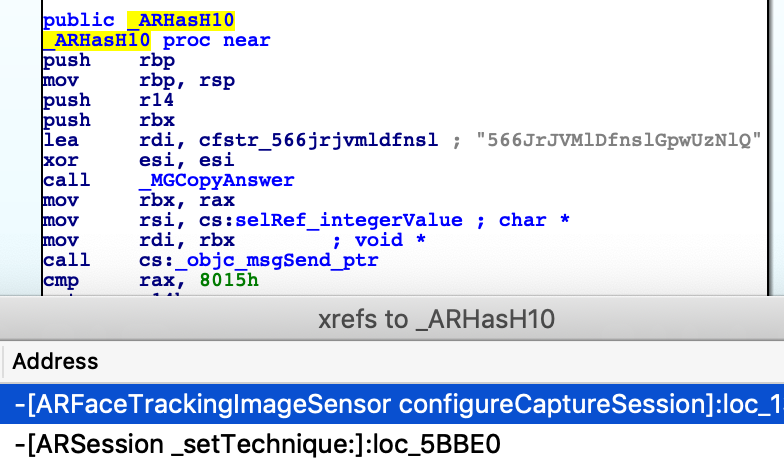

ARKit checks if the device has an A11 Bionic CPU (used in iPhone 8/8 Plus/X) before enabling face detection:

Note the check for 8015, the internal model name of the A11 Bionic.

These pieces of evidence show that the face detection is not performed with code in iOS, but with hardware unique to the A11 Bionic chip, such as its Image Signal Processor. If that’s true, then the iPad Air could never run this face detector, because it simply doesn’t have the hardware.

What about the other tracker?

The internal tracker

In the Animoji code, there’s an interesting method, +[AVTFaceTracker setUsesInternalTrackingPipeline]. What’s the Internal tracking pipeline?

As it turns out, it’s a second, hidden face detector supported by ARKit. Instead of being activated with ARFaceTrackingConfiguration, it’s activated with ARInternalFaceTrackingConfiguration. Instead of asking AVFoundation for faces, ARKit handles detection itself using a framework called “FaceKit”, in AppleCVA.framework.

The contents of AppleCVA.framework reveal that this Internal tracker is powered by CoreML:

$ pwd
/Volumes/PeaceBSeed16B5068i.D331DeveloperOS/System/Library/PrivateFrameworks/AppleCVA.framework/resources_facekit
$ ls
MetaData.json correction_features-d3x-n84.espresso.weights rnn_features-d3x-n84.espresso.net
blendshape_indices.binary correction_regression.plist rnn_features-d3x-n84.espresso.shape
blendshape_sizes.binary facekit_resources.dat rnn_features-d3x-n84.espresso.weights
cnn_features-d3x-n84.espresso.net faces.binary rnn_regression.plist
cnn_features-d3x-n84.espresso.shape failure_regression.plist rnn_tongue_features-d3x-n84.espresso.net
cnn_features-d3x-n84.espresso.weights id_fitting_rgb_only rnn_tongue_features-d3x-n84.espresso.shape
cnn_regression.plist id_fitting_rgbd rnn_tongue_features-d3x-n84.espresso.weights
contour.binary landmarks.binary tensor.plist
correction_features-d3x-n84.espresso.net memory.binary tongue_regression.plist
correction_features-d3x-n84.espresso.shape rnn_concat.plist

The .net, .shape, and .weights files match the format of compiled CoreML networks.

Since this is a face tracker that does run directly in iOS, using a public framework, without requiring dedicated hardware or firmware, it should work on the iPad Air, right?

Laurelmoji[7945:1032737] [espresso] [Espresso::handle_ex_plan] exception=Error creating mps kernel: q=0

Laurelmoji[7945:1032737] FaceKit: <ERROR> failed creating regressor

Laurelmoji[7945:1032737] FaceKit: <ERROR> couldn't load identity fitting CNN

Laurelmoji[7945:1032737] FaceKit: could not initialize.

Whoops, I forgot: while CoreML itself is supported on the iPad Air, GPU acceleration for CoreML doesn’t work on iPad Air, as it doesn’t support Metal Performance Shaders. The Internal tracker depends on GPU acceleration, and thus won’t run.
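A check like the one below would surface this limitation before Espresso does. It is only a minimal sketch: `MPSSupportsMTLDevice` is the public Metal Performance Shaders query, but the wrapper name `defaultGPUSupportsMPS` is made up for illustration, and nothing here shows that FaceKit itself performs such a check.

```swift
#if canImport(Metal) && canImport(MetalPerformanceShaders)
import Metal
import MetalPerformanceShaders
#endif

/// Returns true when the default GPU can run Metal Performance Shaders.
/// Returns false when there is no Metal device, or when the platform
/// has no Metal at all (hypothetical helper, not part of any Apple API).
func defaultGPUSupportsMPS() -> Bool {
#if canImport(Metal) && canImport(MetalPerformanceShaders)
    guard let device = MTLCreateSystemDefaultDevice() else { return false }
    return MPSSupportsMTLDevice(device)
#else
    return false
#endif
}
```

Calling `defaultGPUSupportsMPS()` at launch gives a yes/no answer to "can GPU-backed networks run here?", which is the question the log above answers the hard way.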

The old Vision.framework tracker

OK, so ARKit’s face trackers won’t work on iPad Air. However, iOS includes another tracker: VNDetectFaceLandmarksRequest in Vision.framework. Even better: Apple provides a sample app that shows how to track a face using the front-facing camera with this framework.

Unfortunately, there are three issues with this face tracker:

1. It is slow. The tracker can barely run at 5 fps on my iPad Air.

2. It is inaccurate. The detection often misaligns the facial features or just doesn’t detect my face at all.

I’ve seen other AR face trackers work much better and faster (~10-15 fps), on the same device and the same resolution (720p), so that’s no excuse.

But perhaps most importantly, for my project:

3. Like most face trackers, but unlike ARKit, Vision.framework outputs detected facial features as raw points, not blend shapes.

That’s a problem, because ARKit only animates characters with blend shapes.
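To make "raw points" concrete, here is a rough sketch of pulling the inner-lip outline out of Vision for one camera frame. The Vision calls are the public ones (`VNDetectFaceLandmarksRequest`, `VNImageRequestHandler`, `VNFaceObservation`); the helper names `imagePoint` and `innerLipPoints` are invented for this example, and this is not the code from Apple's sample app or from my project.

```swift
import Foundation
#if canImport(Vision)
import Vision
import CoreVideo
#endif

/// Vision reports each landmark relative to the face's bounding box, and the
/// bounding box relative to the image. This maps one landmark to pixels.
func imagePoint(landmark: CGPoint, faceBox: CGRect, imageSize: CGSize) -> CGPoint {
    let x = (faceBox.origin.x + landmark.x * faceBox.size.width) * imageSize.width
    let y = (faceBox.origin.y + landmark.y * faceBox.size.height) * imageSize.height
    return CGPoint(x: x, y: y)
}

#if canImport(Vision)
/// Runs the landmark detector on one frame and returns the inner-lip outline
/// of the first detected face in pixel coordinates (origin at the bottom left).
func innerLipPoints(in frame: CVPixelBuffer) throws -> [CGPoint] {
    let request = VNDetectFaceLandmarksRequest()
    let handler = VNImageRequestHandler(cvPixelBuffer: frame, options: [:])
    try handler.perform([request])
    guard let face = (request.results as? [VNFaceObservation])?.first,
          let lips = face.landmarks?.innerLips else { return [] }
    let size = CGSize(width: CVPixelBufferGetWidth(frame),
                      height: CVPixelBufferGetHeight(frame))
    return lips.normalizedPoints.map {
        imagePoint(landmark: $0, faceBox: face.boundingBox, imageSize: size)
    }
}
#endif
```

All you get back is a list of 2D coordinates. Nothing in that output says "the jaw is 40% open"; that number has to be derived separately.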

How Animoji are animated

ARKit outputs two types of data for a tracked face:

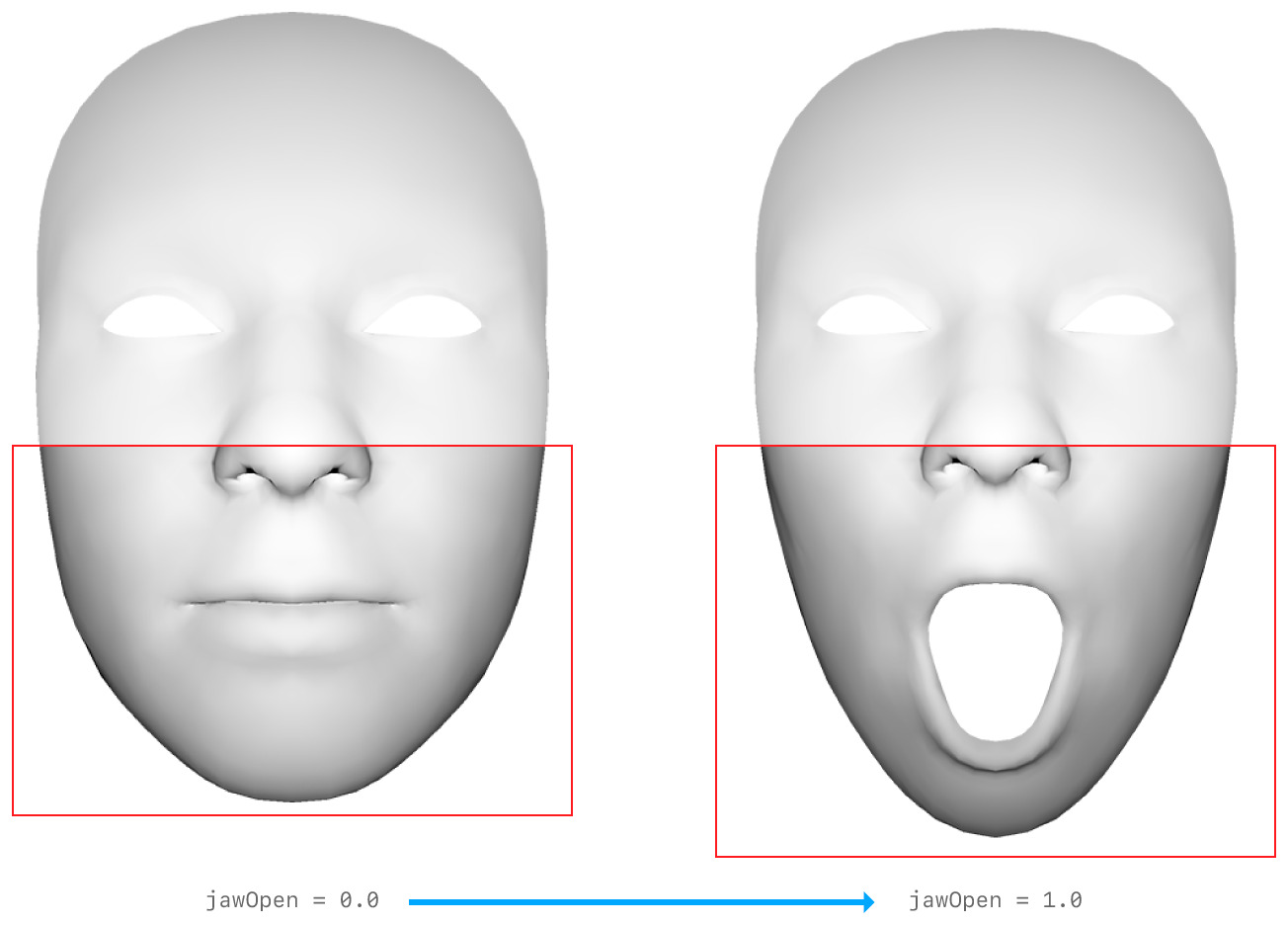

- A set of Blend Shapes, showing the user’s facial expression as a set of numbers. one for each facial feature.

For example, ARBlendShapeLocationJawOpen show how much the mouth is open:

(source: Apple documentation)

- and a 3d polygonal mask generated by taking a generic face shape and deforming it using the blend shapes.
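The usual way to deform a mesh with blend shapes is a weighted sum: each shape stores an offset per vertex, and its weight decides how much of that offset gets added to the neutral face. The sketch below shows that textbook formula only. How ARKit actually builds its mask isn't something I looked into, and `Vertex` and `deform` are hypothetical names.

```swift
/// A vertex position in 3D space (hypothetical type for this sketch).
struct Vertex: Equatable {
    var x, y, z: Double
}

/// Deforms a neutral mesh: every blend shape stores one offset per vertex,
/// and its weight (normally 0...1) scales how much of that offset is applied.
func deform(neutral: [Vertex],
            offsets: [String: [Vertex]],
            weights: [String: Double]) -> [Vertex] {
    var result = neutral
    for (name, weight) in weights {
        // Skip shapes with no offsets or a mismatched vertex count.
        guard let shapeOffsets = offsets[name],
              shapeOffsets.count == neutral.count else { continue }
        for i in result.indices {
            result[i].x += weight * shapeOffsets[i].x
            result[i].y += weight * shapeOffsets[i].y
            result[i].z += weight * shapeOffsets[i].z
        }
    }
    return result
}
```

With a single chin vertex at the origin, a "jawOpen" offset of (0, -2, 0) and a weight of 0.5, the vertex ends up at (0, -1, 0): halfway to fully open.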

Surprisingly, Memoji only uses the blend shapes, not the polygons.

On one hand, that means I don’t have to convert the points from the Vision.framework tracker to polygons to match ARKit. On the other hand, I do need to calculate each blend shape from the Vision.framework points. By hand.

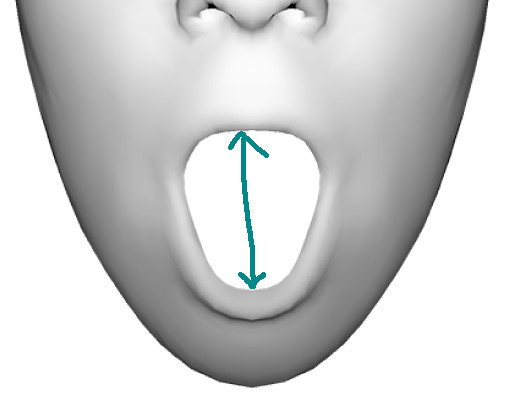

That can’t be that hard, right? To calculate jaw open, just measure from the top lip to the bottom lip.

Easy.

To calculate mouth pucker, just measure from the… left corner of the mouth? To the right corner, I guess, but what about when the user opens their mouth? Then there aren’t any corners, and anyway puckering one’s mouth looks different with the mouth open and…

Wait, how many of these blend shapes are there?

52?

OK, I can’t calculate that many parameters. (At least one reader is extremely disappointed.)

In the end, I only managed to calculate two parameters: jaw open and distance from camera. I’ve recorded a short video of what Animoji are like with only two tracked blend shapes: the result was… terrible.
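For a sense of what those two calculations can look like, here is a minimal sketch. It is not the code from my project: the function names, the "fully open" ratio of 0.3, and the 0.15 m average face width are all assumptions picked for illustration, and the distance estimate relies on the standard pinhole camera model.

```swift
/// A 2D landmark in pixel coordinates (hypothetical type for this sketch).
struct Point {
    var x, y: Double
}

/// Rough jawOpen estimate in 0...1: the gap between the lips divided by the
/// face height, scaled by an assumed "fully open" ratio and then clamped.
func estimateJawOpen(upperLip: Point, lowerLip: Point, faceHeight: Double,
                     fullyOpenRatio: Double = 0.3) -> Double {
    guard faceHeight > 0, fullyOpenRatio > 0 else { return 0 }
    let gap = abs(upperLip.y - lowerLip.y)
    return min(max(gap / faceHeight / fullyOpenRatio, 0), 1)
}

/// Rough distance from the camera in meters, using the pinhole model:
/// distance = focal length (pixels) * real face width / face width (pixels).
/// Returns nil when no usable face width is available.
func estimateDistance(faceWidthPixels: Double, focalLengthPixels: Double,
                      realFaceWidthMeters: Double = 0.15) -> Double? {
    guard faceWidthPixels > 0 else { return nil }
    return focalLengthPixels * realFaceWidthMeters / faceWidthPixels
}
```

Dividing by face height keeps the jaw value stable as the user moves toward or away from the camera. The constants would need tuning per person, which is part of why hand-deriving all 52 shapes is impractical.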

Challenge

So this proves quite conclusively that Animoji, with full tracking and graphical quality, will never work on my old iPad Air.

However, there is one device that uses the A11 Bionic chip, like the iPhone X, and has the exact same CPU and GPU: the iPhone 8.

I challenge you to prove me wrong by porting Animoji to the iPhone 8. My source for this experiment is available on GitHub.

What I learned

- There’s not one, but two face tracking pipelines in ARKit.

- Apple uses CoreML in their own apps, unlike some of their other frameworks (cough WatchKit cough)

- Samsung and Apple made different trade-offs in their respective AR avatar functionalities

- The local symbols from the dyld cache are very useful for patching programs

- Using Swift code from Objective-C

- Using Swift to read local symbols from the dyld cache

- Face tracking is hard

- Never trust everything you read in a phone review

What I want to learn next

- How do you reverse engineer iOS frameworks inside a dyld cache? I’ve tried so many tools and spent so much time, but I still can’t extract a framework that preserves method names and Obj-C selectors…